
Data Protector 9 – Patch Bundle 9.07 (Build 109)

On 2016/07/05, Hewlett Packard Enterprise released patch bundle 9.07 for Linux, HP-UX and Windows. It includes important changes and new features; for a complete list, please refer to the patch description.

Sam Marsh, product manager for Data Protector, listed some of the new features in the Community Blog – http://community.hpe.com/t5/Backup-and-Governance/HPE-Data-Protector-9-07-is-here/ba-p/6873769

It is strongly recommended to read the full description of the bundle.

If you have a valid support contract, you can download the patches from https://softwaresupport.hpe.com. To log in, you need an HPE Passport account.

Direct link:

– Windows – DPWINBDL_00907 – Patch Bundle

– Linux – DPLNXBDL_00907 – Patch Bundle

– HP-UX – DPUXBDL_00907 – Patch Bundle

Did you know? If you received a hotfix for a previous Data Protector (minor) version, make sure the hotfix is included in the patch bundle before you apply the bundle. This applies in particular to customers who received the hotfix shortly before the patch bundle was released. If your hotfix is not included in the patch bundle, open a software case and ask for assistance.
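The check above is essentially a set comparison. The hypothetical helper below reports which hotfixes the bundle does not cover. It assumes you have copied the hotfix IDs from your hotfix documentation, and the IDs listed in the patch bundle description, into two lists by hand. No Data Protector API is involved, and the IDs in the usage note are placeholders rather than real defect numbers.

```python
def hotfixes_not_in_bundle(hotfix_ids, bundle_fixed_ids):
    """Return the hotfix IDs that the patch bundle does not list as fixed.

    Both arguments are plain lists of ID strings collected by hand from the
    hotfix notes and the patch bundle description (assumption). IDs are
    compared case-insensitively and with surrounding whitespace removed.
    """
    bundle = {i.strip().upper() for i in bundle_fixed_ids}
    mine = {i.strip().upper() for i in hotfix_ids}
    return sorted(mine - bundle)
```

For example, `hotfixes_not_in_bundle(["HF-1001", "HF-1002"], ["HF-1001"])` returns `["HF-1002"]`. Any ID in the result is a reason to open a software case before you install the bundle.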

HPE Backup Navigator 9.40

On 2016/06/30 Hewlett Packard Enterprise released the new version of Backup Navigator – version 9.40. The new version includes important changes and new features. HPE Backup Navigator is the reporting and analytics tool for HPE Data Protector.

Information about the new version, as well as the binaries, can be downloaded here: http://www8.hp.com/us/en/software-solutions/data-protector-backup-recovery-software/try-now.html.

RUNBOOK – Migrate Data Protector 7.0x to Data Protector 9.0x

Update 2016/07/05: The section "Installation sources" recommended using a patched installation source for the upgrade. This method must not be used for upgrades from Data Protector versions 8.13 and newer, as it will cause serious damage to the internal database (no fix available or possible). For versions older than 8.13, it is also recommended not to use a patched installation source, because this has not been tested by engineering. In this case, please install Data Protector 9.0 first, followed by the current patch bundle. The one exception where a patched installation source can be used is a new installation (“green field approach”, e.g. a Windows 2012 R2 cluster Cell Server), as there are no dependencies for upgrading the internal database.

Data Protector 7.0 was introduced on 16.04.2012 and has since been superseded by two major releases. Support for Data Protector 7.0x ends on 30.06.2016, so it is time for all undecided customers to upgrade to the current version, Data Protector 9.06. This is a big step, because the internal database changes with the upgrade, and many customers have postponed it due to uncertainties about the upgrade. Hewlett Packard Enterprise has already released two advisories explaining how the upgrade to the new internal database can be performed (please refer to http://h20564.www2.hpe.com/hpsc/doc/public/display?docId=c04350769 and http://h20566.www2.hpe.com/hpsc/doc/public/display?docId=c04937621). However, the move to the new version is also an opportunity to reconsider the backup and recovery strategy (e.g. backup to HPE StoreOnce), as the current version offers many new features compared to Data Protector 7.0x. Some selected features:

- 3PAR Remote Copy support

- Automated pause and resume of backup jobs

- Accelerated VMware backup through 3PAR snapshot management

- Cached VMware single item recovery direct from HPE 3PAR snapshot or Smart Cache

- VMware Power On and Live Migrate from HPE 3PAR snapshot or Smart Cache

- StoreOnce Catalyst over Fibre Channel and Federated Catalyst support

- Data Domain appliance to appliance replication management

- Automated Replication Synchronization – synchronize metadata of replicated objects between Cell Managers

There are many instructions on the web, but no step-by-step documentation for upgrading to the latest version. This article therefore covers the upgrade from Data Protector 7.0x to Data Protector 9.06 and provides all required steps as a runbook.

Some notes before you start:

- This step-by-step documentation applies to a Cell Manager installation on Windows 2008 or Windows 2012. It assumes that the paths C:\Program Files\OmniBack and C:\ProgramData\OmniBack are used. The runbook can also be used for Linux or for other installation paths.

- During the catalog migration (a separate, subsequent process), the DCBF files are converted into a new format. The new DCBF format is approximately 1.5 to 2 times larger than the old format, so sufficient free disk space is required.

- All existing Data Protector licenses should be regenerated in advance using the new licensing portal https://myenterpriselicense.hpe.com, because old OVKEY3 licenses can no longer be used in current Data Protector versions. Of course, you can only migrate licenses that are covered by a maintenance contract.

- Should the upgrade fail despite these detailed instructions, a rollback to Data Protector 7.0x is always possible. However, you need your DP 7.0 installation sources and the most recently installed patch bundle.

- It is recommended to move all used and appendable media into a separate pool, to avoid mixing media that still have to be migrated with new media.

- The catalog migration of DCBF files is a downstream process and can be performed either directly after the upgrade or a few weeks later. If possible, plan for no activity on the Cell Server during the catalog migration.

- It is recommended to test the upgrade on a virtual machine. The exported Data Protector 7 database can easily be imported into the test environment.

- With Data Protector 9.0x, name resolution has top priority if problems during or after the migration are to be avoided. The Cell Manager must therefore always be resolvable (FQDN, short name and reverse lookup).

- After the upgrade to the latest version, clients on older operating systems may stop working; Windows 2003, for example, is no longer supported. If such a client runs as a virtual machine, it can be backed up in the future using the VMware or Hyper-V integration.

- The following steps describe only one possible migration path; there are several ways to upgrade. Success is therefore not guaranteed, because a successful upgrade also depends on the existing environment. However, this runbook has already been used in many successful migrations, so the instructions should suit your environment too.
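Two of the notes above can be checked before the upgrade starts: free disk space for the larger DCBF format, and name resolution of the Cell Manager. The sketch below is hypothetical and not part of Data Protector. It assumes the old DCBF files stay in place until the old catalog is removed at the end of the runbook, so the converted copies need extra room. It uses the upper bound of the 1.5x to 2x growth stated above. Only the Python standard library (`os`, `shutil`, `socket`) is used.

```python
import os
import shutil
import socket


def required_free_bytes(old_dcbf_bytes, factor=2.0):
    """Free space needed for the converted DCBF files.

    The runbook states the new format is about 1.5 to 2 times larger than
    the old one; factor=2.0 uses the upper bound. The old files remain
    until the old catalog is removed, so this space is needed on top.
    """
    return int(old_dcbf_bytes * factor)


def dir_size(path):
    """Total size in bytes of all files below path (0 if path is missing)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            total += os.path.getsize(os.path.join(root, name))
    return total


def dcbf_space_ok(dcbf_dirs, target, factor=2.0):
    """Return (ok, needed_bytes, free_bytes) for the drive holding `target`."""
    needed = required_free_bytes(sum(dir_size(d) for d in dcbf_dirs), factor)
    free = shutil.disk_usage(target).free
    return needed <= free, needed, free


def name_resolution_ok(fqdn):
    """Check forward lookup of the FQDN and short name, plus reverse lookup.

    Returns True only if all lookups succeed and the reverse lookup of the
    FQDN's address maps back to the same short host name.
    """
    short = fqdn.split(".")[0]
    try:
        ip = socket.gethostbyname(fqdn)
        socket.gethostbyname(short)  # raises if the short name does not resolve
        reverse_name = socket.gethostbyaddr(ip)[0]
    except OSError:  # socket.gaierror and socket.herror derive from OSError
        return False
    return reverse_name.split(".")[0].lower() == short.lower()
```

On the Cell Manager you might call `dcbf_space_ok([r"C:\ProgramData\OmniBack\DB40\DCBF"], "C:\\")`, adding any further DCBF directories, and `name_resolution_ok("cellmanager.example.com")`, where the host name is a placeholder. Run the name check on the Cell Manager and on the clients as well, because each side must be able to resolve the other.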

Preparation:

- Check the consistency of the internal database. No errors may appear; if errors are reported, you must fix them before continuing with the upgrade to DP 9.0x. To check the IDB, use the command:

omnidbcheck -extended

- Clean up the old internal database:

- Close the Data Protector GUI

omnidbutil -clear
omnidb -strip
omnidbutil -purge -sessions 90 (or a correspondingly higher or lower value)
omnidbutil -purge -dcbf -force
omnitrig -stop
omnistat (no backup job may be running)
omnidbutil -purge -filenames -force

- Open the Data Protector GUI

- Monitor -> Current Sessions

- Select the purge session

- Watch progress and wait for the end of the process

- While filenames are being purged, no backups can run; scheduled jobs are queued and only start once the purge has completed. In larger environments, it may be necessary to stop the purge and continue it later. To stop the purge, use the command

omnidbutil -purge_stop

Alternatively, the purge can be performed selectively for individual clients.

- Create directories for the migration:

mkdir C:\migration\mmdb
mkdir C:\migration\cdb
mkdir C:\migration\other
mkdir C:\migration\programfiles\omniback
mkdir C:\migration\programdata\omniback

- Defrag the internal database using export and import

- No backups may be running; check with the command

omnistat

- Then export the internal database:

omnidbutil -writedb -mmdb C:\migration\mmdb -cdb C:\migration\cdb

- At the end of the export, you are prompted to copy some special directories. You must copy them before the database is re-enabled. These are usually the DCBF and MSG directories, but other directories may also need to be copied. To be safe, copy the following directories:

robocopy "C:\ProgramData\OmniBack\DB40\DCBF" C:\migration\other\DCBF *.* /e /r:1 /w:1

- Further DCBF directories may also need to be copied. In that case, copy them in the same way.

robocopy "C:\ProgramData\OmniBack\DB40\msg" C:\migration\other\msg *.* /e /r:1 /w:1
robocopy "C:\ProgramData\OmniBack\DB40\meta" C:\migration\other\meta *.* /e /r:1 /w:1
robocopy "C:\ProgramData\OmniBack\DB40\vssdb" C:\migration\other\vssdb *.* /e /r:1 /w:1

- Now import the exported database again using the following command:

omnidbutil -readdb -mmdb C:\migration\mmdb -cdb C:\migration\cdb

- If the command

omnidbinit -force

was used before importing the database, the DCBF, MSG and META directories must be copied back to their original location, because the original folders are deleted when the database is initialized. The Data Protector services must be stopped for this. If necessary, additional DCBF directories for the internal database must be created beforehand.

- No backups may be running; check with the command

omnistat

- To be safe, run

omnidbcheck -extended

again; it must not report any errors.

- If necessary, temporary files in C:\ProgramData\OmniBack\tmp and C:\ProgramData\OmniBack\log can be removed. But please be aware: not all log files may be deleted. Files such as media.log must be retained, because they may be needed when recovering data. media.log records when, and in what order, each medium was used during a backup.

- Copy (back up) the entire Data Protector installation:

omnisv -stop
robocopy "C:\Program Files\OmniBack" C:\migration\programfiles\omniback *.* /e /r:1 /w:1 /purge
robocopy C:\ProgramData\OmniBack C:\migration\programdata\omniback *.* /e /r:1 /w:1 /purge
omnisv -start
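Not every file in the tmp and log folders may be deleted, so a dry run that only lists deletion candidates is safer than removing files blindly. The hypothetical sketch below walks the given folders and skips every name on a keep list. The keep list contains only media.log, because that is the file the runbook names. Treat any additional entries as your own assumption, and review the output before deleting anything.

```python
import os

# Names that must never be removed. The runbook names media.log; extend
# this set yourself after reviewing your log folder (assumption).
KEEP = {"media.log"}


def is_removable(filename, keep=KEEP):
    """True unless the file name is on the keep list (case-insensitive)."""
    return filename.lower() not in {k.lower() for k in keep}


def cleanup_candidates(folders, keep=KEEP):
    """Dry run: list files below `folders` that are not on the keep list.

    Nothing is deleted; review the returned paths before removing anything.
    """
    candidates = []
    for folder in folders:
        for root, _dirs, files in os.walk(folder):
            candidates.extend(
                os.path.join(root, name) for name in files if is_removable(name, keep)
            )
    return sorted(candidates)
```

A typical call is `cleanup_candidates([r"C:\ProgramData\OmniBack\tmp", r"C:\ProgramData\OmniBack\log"])`. The function only returns paths and never deletes anything, so the final decision stays with the administrator.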

Installation sources:

- It is highly recommended to use a patched installation source for the installation. To this end, the following steps are carried out on a temporary Windows server on which no Data Protector installation exists.

- Run the Data Protector 9.0 installation as an administrator (Run as administrator) and select the Installation Server. No components other than the Installation Server are installed.

- After the installation has completed, install the current patch bundle and any additional patches. Please refer to https://www.data-protector.org/wordpress/2016/04/hpe-data-protector-patch-bundle-9-06-features/ and https://www.data-protector.org/wordpress/2013/06/basics-installation-order-patches/

- The updated installation can then serve as the installation source for the Cell Manager upgrade. To do so, copy all depot folders from the temporary installation server to the Data Protector 7.0x Cell Manager (e.g. to C:\migration\install). Once this is done, the temporary installation server can be uninstalled.

Upgrade:

- The upgrade is performed with the patched installation sources; run setup.exe as an administrator (Run as administrator).

- It is recommended to accept all proposed defaults during the upgrade.

- During the upgrade parts of the old database are exported and prepared for import into the new database. This process may take some time depending on the size of the environment; the process should not be interrupted.

- After this step, the installation of Data Protector 9.0x continues normally.

- After the upgrade, it is recommended to review and adjust the configuration files global and omnirc.

- In addition, check the backup specification of the Cell Manager. During the upgrade to DP 9.06, the existing specification is split into an IDB specification and a filesystem specification.

After the upgrade:

- After the upgrade, the DCBF directories should be migrated to the new format. In principle, there are two options: migrate all DCBF files immediately, or migrate only the DCBF files of long-term backups.

- The second option is preferred: you wait until the short-term protection of the old media has expired, so their DCBF files do not have to be migrated. After four to six weeks, you migrate only the media with long-term protection.

- Required steps for both options:

- Open administrative command line and change into the directory

C:\Program Files\OmniBack\bin - Run the command:

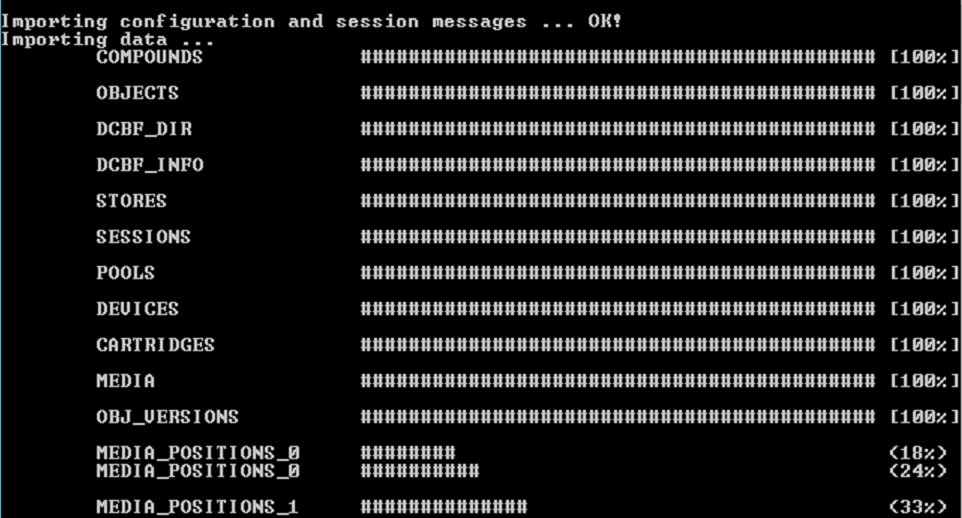

perl omnimigrate.pl -start_catalog_migration - The DCBF migration is very time consuming, however, runs in the background and does not have to be actively monitored.

- After the DCBF migration has finished, check that all media have been migrated using the command

perl omnimigrate.pl -report_old_catalog

The expected result is "DCBF (0 files)". If files are still listed, the following steps must not be executed.

- If new backups ran during the DCBF migration, DCBF 2.0 files may have been created in the DB40\DCBF* directories.

- Move the files found in DB40\DCBF* to C:\ProgramData\OmniBack\server\DB80\DCBF\dcbf1-4. The files can be distributed freely; in principle, no particular order needs to be maintained.

- To apply the change, run the command

omnidbutil -remap_dcdir

after moving the DCBF files.

- Remove the old DCBF directories using the following commands:

omnidbutil -remove_dcdir "C:\ProgramData\OmniBack\DB40\DCBF"
omnidbutil -remove_dcdir "C:\ProgramData\OmniBack\DB40\dcbf1"
omnidbutil -remove_dcdir "C:\ProgramData\OmniBack\DB40\dcbf2"
omnidbutil -remove_dcdir "C:\ProgramData\OmniBack\DB40\dcbf3"
omnidbutil -remove_dcdir "C:\ProgramData\OmniBack\DB40\dcbf4"

- Depending on the size of the environment, fewer or more DCBF directories may be present; remove them accordingly.

- Remove the old catalog with the command

perl omnimigrate.pl -remove_old_catalog

- In the file C:\ProgramData\OmniBack\Config\server\options\global, set the variable SupportOldDCBF=0. Alternatively, the variable can be removed (after the upgrade its value is 1).

- Now the DB40 path can be deleted:

C:\ProgramData\OmniBack\DB40
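Several of the post-migration steps above are mechanical and can be prepared by a script. The sketch below is a hypothetical helper, not part of Data Protector. It performs four tasks:

- It reads the count from the "DCBF (N files)" token quoted above in the `-report_old_catalog` output. The exact report layout is an assumption.
- It plans a round-robin distribution of leftover DCBF files across dcbf1-4. Round-robin is just one choice, since the order does not matter.
- It builds one `omnidbutil -remove_dcdir` command for each DCBF directory that actually exists.
- It sets `SupportOldDCBF=0` in the text of the global file.

The helper only returns strings and plans. Moving files and running the commands remain manual steps.

```python
import ntpath
import re


def old_catalog_remaining(report_text):
    """Return the number of old DCBF files reported, or None if not found.

    Assumes the output of `perl omnimigrate.pl -report_old_catalog`
    contains a token like "DCBF (0 files)", as quoted in the runbook.
    """
    match = re.search(r"DCBF \((\d+) files?\)", report_text)
    return int(match.group(1)) if match else None


def distribute(files, targets):
    """Assign files to target directories round-robin (order is irrelevant)."""
    plan = {t: [] for t in targets}
    for i, f in enumerate(sorted(files)):
        plan[targets[i % len(targets)]].append(f)
    return plan


def remove_dcdir_commands(db40, entries):
    """Build one `omnidbutil -remove_dcdir` command per old DCBF directory.

    `entries` is a directory listing of the DB40 folder; every entry whose
    name starts with "dcbf" (case-insensitive) is treated as a DCBF dir.
    ntpath is used so the Windows paths are built correctly on any OS.
    """
    dcbf_dirs = sorted(e for e in entries if e.lower().startswith("dcbf"))
    return ['omnidbutil -remove_dcdir "%s"' % ntpath.join(db40, d) for d in dcbf_dirs]


def set_support_old_dcbf(global_text, value=0):
    """Return the global file text with SupportOldDCBF set to `value`.

    An existing uncommented SupportOldDCBF line is replaced; otherwise the
    setting is appended. Other lines are kept; line endings become "\n".
    """
    lines = global_text.splitlines()
    new_line = "SupportOldDCBF=%d" % value
    for i, line in enumerate(lines):
        if line.strip().split("=")[0].strip() == "SupportOldDCBF":
            lines[i] = new_line
            break
    else:
        lines.append(new_line)
    return "\n".join(lines) + "\n"
```

`old_catalog_remaining` returns `None` when the token is missing. A missing token means the report could not be confirmed, which is different from zero remaining files, so the steps above should then not be executed either.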


Rollback:

- If the upgrade fails, you can roll back at any time, provided the migration of the DCBF files has not yet started.

- Remove the failed Data Protector 9.0x installation, then reinstall Data Protector 7.0x and its patches.

- Re-import the old database with the command

omnidbutil -readdb -mmdb C:\migration\mmdb -cdb C:\migration\cdb

- Additional directories such as DCBF, MSG and META need to be copied back to the DB40 path.

- Copy the configuration from the previously saved Data Protector installation (C:\migration\programdata\omniback) to C:\ProgramData\OmniBack\config\server so that the initial state is restored.

StoreOnce Performance – measure the theoretical throughput

Update 2016/07/20: The procedure below explains how to measure the throughput of a single stream. If you select two or more files from different mount points, you can measure the performance of multiple streams. The procedure can be useful for assessing bandwidth utilization with a known number of streams. By running separate tests with source-side and target-side deduplication, you can also compare the deduplication methods available in Data Protector. In addition, the results may help to estimate how much CPU, RAM and LAN capacity a given gateway would require.

Achieving the best performance and throughput during backups is always a challenge for backup administrators. That’s why I have written many articles on how to test the performance of different devices in Data Protector. Tools like “HPE LTT Tools” can be used to measure the throughput of tape devices, and the good old HPCreateData and HPReadData are typically used to measure throughput on filesystems. So how can you measure the theoretical throughput to StoreOnce when the Catalyst protocol is used with HPE Data Protector, without running a real backup to StoreOnce? The answer is to simulate data instead of reading it from filesystems. The “how to” below explains the required steps. Of course, the measured throughput will differ from what you would get in a regular backup.

How To:

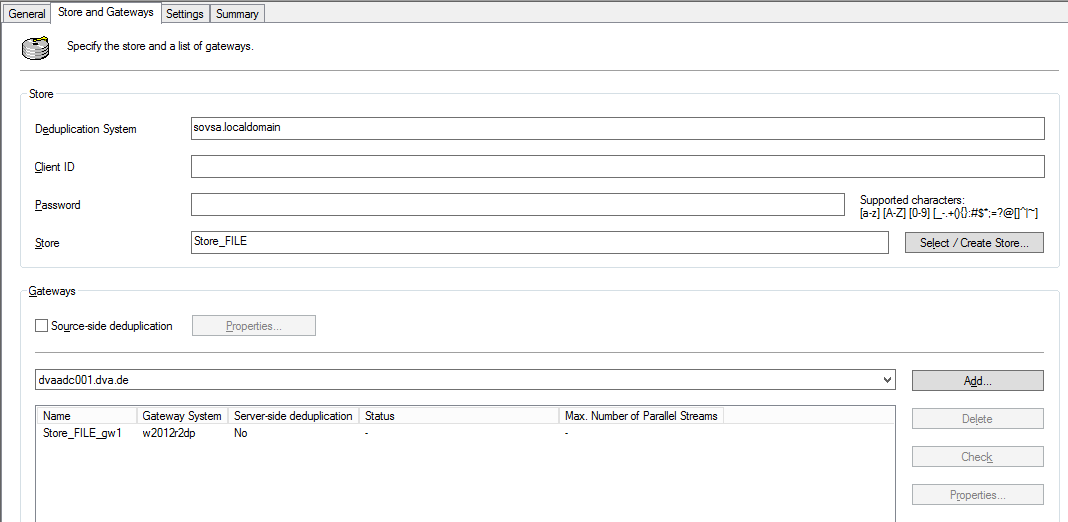

- First, create a StoreOnce Gateway. You should use a new Store for your tests so that you can easily delete it afterwards. In addition, you should use target-side deduplication for the performance test. See the picture for an example; if you need more details on StoreOnce, drop me a mail.

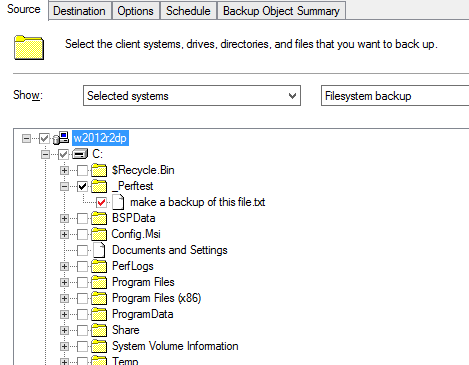

- Next, prepare a new backup specification; you only need to select a single file. As the backup target, use the device you created in the first step. No other options or scheduling are required, except for changing the default protection to None.

- On the Media Agent, temporarily add the following parameters to the configuration file .omnirc (Linux) or omnirc (Windows) and save it. Please note: use these parameters only during the performance test, and make sure that no other backups using this Media Agent run at the same time. If you forget to remove them afterwards, all future “backups” using this Media Agent must be considered unsuccessful.

OB2SIMULATEDATA=1
OB2SIMULATEDFILESIZE=51200

The first parameter enables data simulation; the second sets the size of the data to be simulated, in this example 50 GB (51200 MB). If you want to test physical devices, null devices or file libraries, you can add the parameter

OB2BMASTATISTICS=2

to enable advanced statistics in the session messages.

- When you start the backup specification, no data is backed up; instead, the data is simulated.

[Warning] From: VBDA@w2012r2dp "C:" Time: 24.06.2016 07:44:17 Simulating backup data instead of reading it from object: C:\_Perftest\make a backup of this file.txt

- Using omnispeed (refer to Downloads), you can calculate the average throughput. For this test I used the StoreOnce Laptop Demo, so the achieved throughput does not apply to production environments.

*******************************************************************************
*                                                                             *
*              OMNISPEED v 2.00 - Average Speed of DP Sessions                *
*                                                                             *
*              CoPyRiGhT (c) 2014 Data-Protector.org - Daniel Braun           *
*                                                                             *
*******************************************************************************
Starting script on: w2012r2dp - 24.06.2016, 13:25:39
Init environment ... Done.
Get details for historic session: 2016/06/24-1
SessionID      Type     Status      GB/h     MB/s     Dura Drv sum min #
===============================================================================
2016/06/24-1   BACKUP   COMPLETED   520.23   147.98   5.77 1
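The figures above can be cross-checked by hand. OB2SIMULATEDFILESIZE=51200 corresponds to 50 GB, so the value is in MB with 1 GB = 1024 MB. The omnispeed output fits GB/h = MB/s × 3600 / 1024 with the duration in minutes: 51200 MB / 147.98 MB/s ≈ 346 s ≈ 5.77 min. That reading of the columns is an inference from the numbers shown, not from omnispeed's documentation. The hypothetical helpers below apply the same arithmetic to other test sizes.

```python
def simulated_size_mb(size_gb):
    """OB2SIMULATEDFILESIZE value for a size in GB (assumes 1 GB = 1024 MB)."""
    return int(size_gb * 1024)


def throughput(size_mb, duration_min):
    """Return (MB/s, GB/h) for size_mb transferred in duration_min minutes.

    Uses GB/h = MB/s * 3600 / 1024, which matches the omnispeed figures
    above (an inference from the numbers, not from omnispeed's source).
    """
    mb_per_s = size_mb / (duration_min * 60)
    gb_per_h = mb_per_s * 3600 / 1024
    return mb_per_s, gb_per_h
```

For example, `simulated_size_mb(100)` gives the value for a 100 GB test. `throughput(51200, 5.77)` gives about 147.9 MB/s and about 520 GB/h; the small deviation from omnispeed comes from the rounded 5.77-minute duration.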